One problem to explain why AI works

A framework for understanding AI and keeping up with it all.

Ask your resident experts, Why does AI work? Readily, they’ll explain How it works, methods emptying in a mesmerizing jargonfall of gradient descent. But why? Why will an expensive and inscrutable machine create the knowledge I need to solve my problem? A glossary of technical terms, an architectural drawing, or a binder full of credentials will do little to insulate you from the fallout if you can’t stand up and explain Why.

The purpose of AI is to create machines that create good knowledge. Just as a theory of flight is essential to the success of flying machines, a theory of knowledge is essential to AI. And a theoretical basis for understanding AI has greater reach and explanatory power than the applied or technical discussions that dominate this subject.

As we’ll discover, there’s a deep problem at the center of the AI landscape. Two opposing perspectives on the problem give a simple yet far-reaching account of why AI works, the magnitude of the achievement, and where it might be headed.

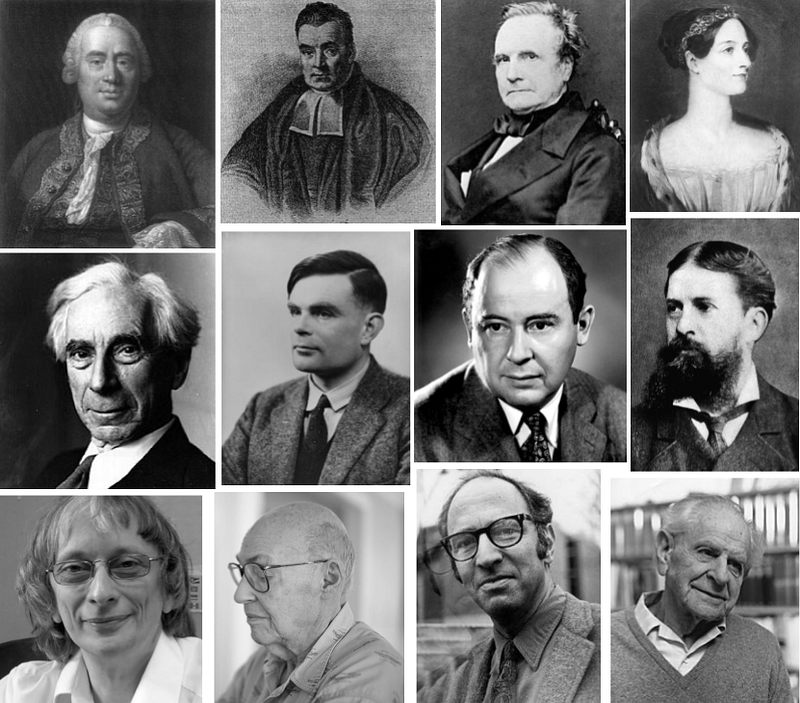

Part 1: Induction as the prevailing theory

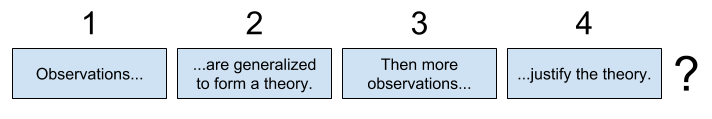

Many overlook the question because it’s obvious how knowledge is created: We learn from observation. This is called inductive reasoning, or induction for short. We observe some phenomena and derive general principles to form a theory. If every raven you’ve observed is black, it’s fair to conclude that all ravens are black. Over time, more black ravens support your theory. Induction is intuitively simple, even irresistible, making it the answer that most people carry.

Machine learning, the most important domain in AI, is also inductive. Our intuitions about knowledge creation and the importance of machine learning inform our working hypothesis of why AI works: It creates knowledge in the same way we all create knowledge, using common sense induction.

“Our best theories are not only truer than common sense, they make far more sense than common sense does.” David Deutsch

But can you really trust induction to create good knowledge? Like all great explorations, to answer that question you first need to wander around a bit to get your bearings.

At the Center of the AI Landscape

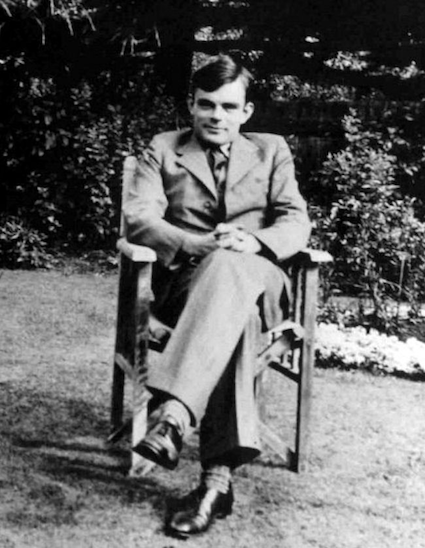

In 1950, Alan Turing wrote a remarkably prescient paper on AI, considering the question of whether machines can think. You’re likely familiar with it for its discussion of the Turing Test. What I find most inspiring about that paper, however, is how comprehensively and enduringly Turing outlined not only the major objections to the feasibility of AI, but also the directions along which the problems might be explored. He identified two very different directions for AI, which serve as geographic coordinates of our landscape. Running north-south is the axis of human-like behaviours, like “the normal teaching of a child”; running east-west, processes of abstract thought, like “the playing of chess.” In the pursuit of AI, Turing opined, “both approaches should be tried.”

Over the intervening decades, people explored Turing’s landscape widely. In their influential textbook on AI, Stuart Russell and Peter Norvig categorize these explorations based on whether the machines are thinking or acting, humanly or rationally (since rationality is an ideal that humans never achieve!). It’s reminiscent of the Buddhist parable of the blind men and an elephant, where each approach is touching only a small part of the whole thing. But in AI, as in life, there are winners and loss functions. Some explorers found abundance; others endured harsh winters.

So which approach prevailed? Across the AI landscape, where are the thriving metropolises and the deserted wastelands? The notion of one prevailing approach is seriously oversimplified; the AI landscape is remarkably broad and diverse. Yet, those tribes generating the most interest, investment and activity establish landmarks of influence. Here, AI is most readily understood as machines acting rationally to get stuff done. Russell and Norvig weigh the pros and cons of different approaches to AI, concluding the rational agents approach is the best of the lot. “A rational agent is one that acts so as to achieve the best outcome or, when there is uncertainty, the best expected outcome.” Questions of good knowledge are subordinated to questions of good behavior. While rational agents made landfall relatively recently, theirs are the gilded cities in AI.

While Russell and Norvig present a principled, top-down defense of rational agents, the choice is better understood as an exploration, the dogged pursuit of a vision. They summarize the foundations of AI across philosophy, mathematics, economics, neuroscience, psychology, computer engineering, control theory and cybernetics, and linguistics. (When your pursuit takes you across that many state lines, you’re dogged!) In a previous post, I’ve traced how the bottom-up consensus emerged, “a vision of AI as an autonomous, goal-seeking system. By design, this definition of intelligence was crafted within the expectations of how this vision of AI would be realized and measured.” Goal-seeking explorers creating goal-seeking AI.

How might the prevailing tribes answer our question, Why does AI work? Well, it acts so as to achieve the best outcome, of course. What’s more, it works even in harsh, uncertain environments, without the benefit of a map or foreknowledge! But like indefatigable and philosophically precocious children, we can keep asking Why? Why does an autonomous goal-seeking agent create good knowledge?

Induction as the Source of Knowledge

Many would argue a focus on knowledge creation is a fatally narrow view of AI. Russell and Norvig caution, “intelligence requires action as well as reasoning.” What of autonomy and automation, decision-making and control, utility functions and payoffs, integration and engineering? Our paths will cross again, but knowledge comes first. It’s the ground, and whether soft or firm, it dictates whether your AI is in fact intelligent. They acknowledge the primacy of sound theoretical foundations. “The quest for ‘artificial flight’ succeeded when the Wright brothers and others stopped imitating birds and started using wind tunnels and learning about aerodynamics.” I take their analogy seriously: Theory leads practice, and theories of knowledge lead AI.

Russell and Norvig refer to the source of knowledge as induction, including computational approaches for how knowledge is extracted from observations. Induction is a principle of science and the prevailing theory of knowledge creation. Given sufficient high-quality data, all the knowledge a system needs to exhibit autonomous, good behaviour may be acquired through learning. Following numerous winters of hype and disappointments, pragmatic considerations reign. A flexible and efficient acquisition of knowledge, surmounting the “knowledge bottleneck,” is the central problem.

Note how the prevailing theory is wrapped in a method. Shai Shalev-Shwartz and Shai Ben-David explain the theory of machine learning in explicitly methodological terms. “The development of tools for expressing domain expertise, translating it into a learning bias, and quantifying the effect of such a bias on the success of learning is a central theme of the theory of machine learning.” I’ll share another example of this schema at the close of this post. The reduction of theory to method is an idea we’ll encounter frequently as we move across the AI landscape. It has antecedents in the Scientific Method and inspires new computational epistemologies for automated science that we’ll meet as we move further afield.

The Leap From Philosophy

Our introductory tour of the AI landscape would be reprehensibly incomplete if we didn’t stop to behold one final landmark, a majestic cliff in the study of knowledge creation. Russell and Norvig explain, “Philosophers staked out some of the fundamental ideas of AI, but the leap to a formal science required a level of mathematical formalization in three fundamental areas: logic, computation, and probability.” Philosophy without formalization is all well and good until you have to code it, and so they leapt.

Yet, formalisms are embraced within analytic philosophy so this requirement in itself doesn’t explain the leap. Russell and Norvig stress their short history is necessarily incomplete, but this isn’t a symptom of brevity. Rather theirs is an accurate portrait of a historical split, as if the foundational work in AI marks a break with modern philosophy. While that’s a broad brush, it does capture the popular sentiment. The dismissal of philosophy is widespread, particularly among technical people.

The computer scientist Scott Aaronson agrees that the original purpose behind the founding work in computer science was the clarification of philosophical issues. Yet, in his discussion of the intersections of computational complexity and philosophy, he emphasizes that parallel investigations in technical disciplines don’t diminish the importance of philosophy or excuse the dismissal of philosophical pursuits. “Indeed, one of my hopes for this essay is that computer scientists, mathematicians, and other technical people who read it will come away with a better appreciation for the subtlety of some of the problems considered in modern analytic philosophy.” In a previous post, I’ve argued that there is much to be gained by remaining open to the contributions of philosophy.

Did the “leap to formal science” leap over competing theories of knowledge creation? One problem in particular is anything but subtle, and resides right at the center of the AI landscape. The principle of induction is often conflated with the uniformity principle of nature, which in turn casts induction as a principle of science. Induction, however, is better understood as a problem, where it finds its historical roots and full context. Guidance on how to manage this problem is what fills the textbooks and directs methodology. And while there is much to learn from the prevailing approach, particularly in the values of pragmatism and experimentation, AI cannot be explained until the problem of induction is understood.

The Problem of Induction

The problem of induction has been debated over centuries, most notably in the writings of David Hume. While I could never do justice to that volume of criticism, I want to give you a sense through a few examples of how these issues erode the commonsensical allure of the theory. The problem is that induction seems to play a central role in science and everyday life, but it also seems prone to produce bad knowledge. Therefore, how can induction be justified?

Well, let me count the ways. No, I mean seriously, just count the ways. If your theory is that all ravens are black, you can keep counting ravens until you find a white one. For good measure, we might also count all the non-black things we see that are not ravens. White shoes aren’t black ravens, so they support our theory, too! (But of course, they also support the theory that ravens are blue.) This is the raven paradox, or Hempel’s paradox, named for its originator, Carl Hempel. In a future post, we’ll meet some of the clever people, including Bayesians, who chiselled this paradox down to size. Here, it’s sufficient to note that counting evidence to confirm your theory is like preaching to the cosmic choir.

Another striking wrinkle on the problem of induction is the so-called “New Riddle of Induction,” from the influential philosopher Nelson Goodman. His riddle introduced these imaginary “grue” and “bleen” objects that change colour over time, highlighting the difference between lawlike and non-lawlike statements, not to mention the ambiguities of language. How do we know some phenomena can be generalized to a lawlike regularity? How do we know what we’ve observed in the past will continue in the future?
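To make the riddle concrete, here is a minimal sketch in Python. It is a simplification of Goodman's argument, not a faithful rendering: the cutoff time and the function names are my own assumptions, and "grue" is reduced to "green if observed before the cutoff, blue afterwards." The point is only that both hypotheses fit every past observation equally well, yet disagree about the future.

```python
# Hypothetical cutoff; Goodman's riddle only needs some arbitrary future moment.
CUTOFF = 100


def green_hypothesis(t):
    """'All emeralds are green': predicts green at every observation time t."""
    return "green"


def grue_hypothesis(t):
    """'All emeralds are grue': green if observed before CUTOFF, blue afterwards."""
    return "green" if t < CUTOFF else "blue"


def consistent(hypothesis, data):
    """True if the hypothesis agrees with every (time, colour) observation."""
    return all(hypothesis(t) == colour for t, colour in data)


# Made-up record: every emerald observed so far (times 0..99) was green.
observations = [(t, "green") for t in range(CUTOFF)]

green_fits = consistent(green_hypothesis, observations)
grue_fits = consistent(grue_hypothesis, observations)
print(green_fits, grue_fits)  # both hypotheses fit the past

# The same evidence supports two incompatible predictions about the future.
print(green_hypothesis(CUTOFF), grue_hypothesis(CUTOFF))
```

Nothing in the observations themselves tells you which hypothesis is lawlike. Whatever rules out "grue" has to come from somewhere other than the data.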

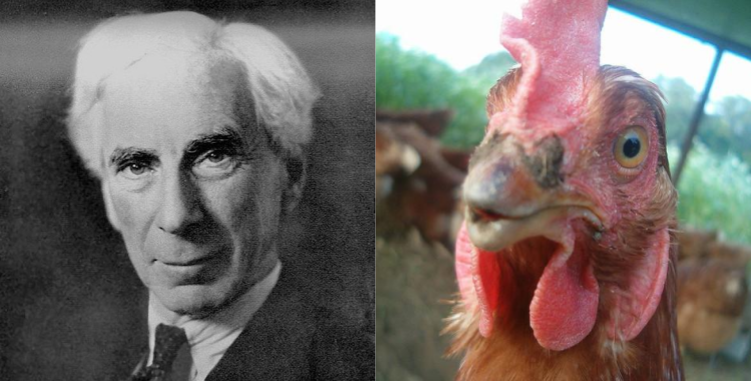

Well, belief in the uniformity of nature underpins the entire scientific effort. When people speak about the principle of induction, this is usually what they’re really saying. What, you think the sun will stop rising? However, this uniformity clearly doesn’t extend to our observations of nature. The philosopher and mathematician Bertrand Russell famously explained this problem through the parable of a chicken that observed the farmer bringing him food every day. From these observations, the chicken induced the theory that the farmer would continue to feed him. The chicken’s inductive theory died violently with the chicken! So too have machine learning projects that failed to see bias in their data’s free lunch.

John Vickers contrasts the problem of induction with deductive reasoning, where according to the rules of logic, the premises imply the argument’s conclusion. “Not so for induction: There is no comprehensive theory of sound induction, no set of agreed upon rules that license good or sound inductive inference, nor is there a serious prospect of such a theory.”

Now, the prevailing AI tribes would beg to differ! There are sound inductive systems, manifest in powerful and fully autonomous machines. And they truly are marvels of science and engineering. But in order to understand the magnitude of these accomplishments, you must first recognize the thorny problem that induction poses. Attempts to manage the problem of induction are revealed as a central challenge of AI.

That said, Vickers is right about the comprehensive part. The problem of induction takes a mathematical form in David Wolpert and William Macready’s no free lunch theorems. They explain, “if an algorithm does particularly well on average for one class of problems then it must do worse on average over the remaining problems.” In other words, there is no universal learner that works well across all problems. Today’s AI is an idiot savant; extraordinarily adept at specific problems but generally inept. At least for now.
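A toy illustration may help here. The sketch below is not Wolpert and Macready's theorem, which concerns optimization and search; it is a small supervised-learning analogue under assumptions I've made up for the purpose: a five-point input space, a fixed train/test split, and two deliberately simple learners. Averaged uniformly over every possible target function, a sensible-looking learner and its contrarian twin score the same on unseen inputs.

```python
from itertools import product

# Hypothetical tiny world: five inputs, three seen in training, two held out.
INPUTS = range(5)
TRAIN = [0, 1, 2]
TEST = [3, 4]


def majority_learner(train_labels):
    """Predict whichever label was most common in training."""
    guess = 1 if sum(train_labels) * 2 > len(train_labels) else 0
    return lambda x: guess


def contrarian_learner(train_labels):
    """Predict the opposite of the most common training label."""
    guess = 0 if sum(train_labels) * 2 > len(train_labels) else 1
    return lambda x: guess


def average_off_training_accuracy(learner):
    """Average held-out accuracy over all 2**5 possible target functions."""
    scores = []
    for target in product([0, 1], repeat=len(INPUTS)):
        model = learner([target[x] for x in TRAIN])
        hits = sum(model(x) == target[x] for x in TEST)
        scores.append(hits / len(TEST))
    return sum(scores) / len(scores)


majority_score = average_off_training_accuracy(majority_learner)
contrarian_score = average_off_training_accuracy(contrarian_learner)
print(majority_score, contrarian_score)  # 0.5 0.5
```

When every target function is equally likely, the training labels carry no information about the held-out labels, so no learner can beat a coin flip on average. Any advantage has to come from assumptions about which targets actually occur.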

Respecting the depth of the problem avoids the contentious idea that induction is a principle. Nature is uniform but observations are fuzzy. The principle that future observations resemble the past is an assumption that inductive systems require to work effectively. Unfortunately, the uniformity of nature says nothing about a particular set of observations, ravens or chickens.

So how do we cope with the problem of induction? We leverage existing knowledge. Shalev-Shwartz and Ben-David explain, “We can escape the hazards foreseen by the No-Free-Lunch theorem by using our prior knowledge about a specific learning task, to avoid the distributions that will cause us to fail when learning that task.” This is the essential connection between existing knowledge and learning. To observe intelligently requires explanations.
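Here is a minimal sketch of what that escape can look like, under assumptions of my own choosing: a ten-point input space, a fixed set of four training points, and prior knowledge that the target is always a threshold rule (label 1 at or above some cutoff, 0 below it). The learners and names are hypothetical illustrations, not anything from Shalev-Shwartz and Ben-David's book.

```python
# Hypothetical setup: ten inputs, four of them labelled for training.
INPUTS = range(10)
TRAIN = [0, 3, 6, 9]
TEST = [x for x in INPUTS if x not in TRAIN]


def threshold_learner(pairs):
    """Encodes the prior knowledge: the target is 1 at or above some cutoff."""
    lo = max([x for x, y in pairs if y == 0], default=-1)
    hi = min([x for x, y in pairs if y == 1], default=len(INPUTS))
    midpoint = (lo + hi) / 2
    return lambda x: 1 if x > midpoint else 0


def majority_learner(pairs):
    """No prior knowledge: predict the most common training label."""
    labels = [y for _, y in pairs]
    guess = 1 if sum(labels) * 2 > len(labels) else 0
    return lambda x: guess


def average_accuracy_on_threshold_targets(learner):
    """Average held-out accuracy over every threshold target, cutoff 0..10."""
    scores = []
    for cutoff in range(len(INPUTS) + 1):
        def target(x):
            return 1 if x >= cutoff else 0
        model = learner([(x, target(x)) for x in TRAIN])
        hits = sum(model(x) == target(x) for x in TEST)
        scores.append(hits / len(TEST))
    return sum(scores) / len(scores)


biased_score = average_accuracy_on_threshold_targets(threshold_learner)
majority_score = average_accuracy_on_threshold_targets(majority_learner)
print(round(biased_score, 3), round(majority_score, 3))
```

The threshold learner wins only because its built-in assumption matches the world it is tested on. Point it at targets that are not threshold rules and the advantage disappears, which is the bargain the theorem describes.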

Part 2: The power of explanations

Now that we have some perspective on the centrality and problem of induction in AI, I want to turn to an opposing anti-inductive theory. Science is our most successful knowledge-creating enterprise. Karl Popper is one of the most influential thinkers in the philosophy of science. Therefore, it’s important, if not central, to consider how Popper’s philosophy explains why AI works.

“I think that I have solved a major philosophical problem: the problem of induction.” Karl Popper

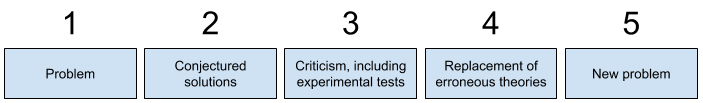

Popper’s solution to the problem of induction is that knowledge is in fact not derived from experience. While it’s true that experience and experimentation play an important role in knowledge creation, his emphasis is on problems. We create explanations and conjectures about how the world works. (Conjectures are informed guesses; the bolder the better.) Competing explanations square off in rounds of criticism and experimentation. Popper emphasized that ravens may always be found to justify a theory. Rather, criticism and experimentation should be directed to finding evidence that refutes the theory, a process of falsification. Although theories are never justified, they may be corroborated if they survive genuine efforts of falsification. Popper solves the problem of induction by rejecting data as the foundation of science, induction as the method, and justification and prediction as the objectives.

To clarify the central role that explanations play in knowledge creation, compare the process of induction, introduced above, to Popper’s schema of problem-solving: When a problem is identified, solutions are conjectured; these conjectures are subjected to criticism and experimental tests, in an effort to root out errors in the theorized solutions; this introduces new problems to solve. Contrary to the much-bandied Scientific Method, science is an iterative process of problem-solving, not a strict methodology. This debate in the philosophy of science finds parallels in the future of AI. As we’ve seen, methodology figures prominently in AI and fuels the aspirations of machine learning as “automated science.”
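The asymmetry between confirming and refuting can be sketched in a few lines of Python. This is only a caricature of the criticism step, with made-up conjectures and observations; it says nothing about where conjectures come from, which is the hard part.

```python
# Hypothetical conjectures about ravens, each a rule over (species, colour) sightings.
conjectures = {
    "all ravens are black": lambda species, colour: species != "raven" or colour == "black",
    "all ravens are blue": lambda species, colour: species != "raven" or colour == "blue",
}

# Made-up observations. Note the white shoe contradicts neither conjecture.
observations = [("shoe", "white"), ("raven", "black"), ("raven", "black")]


def surviving(candidates, sightings):
    """Keep only the conjectures that no sighting contradicts."""
    return [
        name
        for name, rule in candidates.items()
        if all(rule(species, colour) for species, colour in sightings)
    ]


corroborated = surviving(conjectures, observations)
print(corroborated)  # ['all ravens are black']

# A single white raven refutes what no number of black ravens could prove.
after_white_raven = surviving(conjectures, observations + [("raven", "white")])
print(after_white_raven)  # []
```

A conjecture that survives is corroborated, not justified: it simply hasn't been refuted yet, and the next test may bring a new problem to solve.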

The Anti-Induction Debate

Despite his influence within the philosophy of science, the implications of Popper’s theory are often ignored in computer science. One explanation for this neglect may be that the majority of philosophers remain unconvinced by his arguments. A common criticism is that despite Popper’s efforts to distinguish his concept of corroboration from justification, people need justification as a basis for practical action. (Remember the advice of Russell and Norvig, that AI requires action as well as reasoning.) The psychological need for justification and the intuitive validity of induction impose serious impediments to anti-inductive theories of knowledge creation.

Other aspects of Popper’s philosophy are harder to ignore. He stressed (alongside several notable philosophers) that all knowledge creation is theory-laden; observations are never free of an underlying theory or explanation. Even if you believe the process begins with observations, the act of observing requires a point of view. It may seem observational, “the man is sitting on the bench”, but what caused you to look at the man? Language itself is theory-laden. Terms refer to concepts, which in turn point to other terms, in an infinite regress. What is a bench and sitting and why do they fit together? We make an observation, we don’t take it. Ask data scientists how much time they spend deciding what to observe and what to exclude, or how difficult it is to wrangle the ambiguities of language.

The centrality of explanations in knowledge creation is not a contentious idea, in and of itself. David Deutsch, a staunch Popperian and pioneer of quantum computation, argues that computational theory and epistemology (along with quantum theory and evolution) comprise the Fabric of Reality. Responding to Goodman’s riddle, introduced above, Deutsch frames languages as theories in the service of problem-solving. “One of the most important ways in which languages solve these problems is to embody, implicitly, theories that are uncontroversial and taken for granted, while allowing things that need to be stated or argued about to be expressed succinctly and cleanly.” Wesley Salmon, in his classic book on the subject, asserted, “On one fundamental issue, the consensus has remained intact. Philosophers of very diverse persuasions continue to agree that a fundamental aim of science is to provide explanations of natural phenomena.”

Deutsch cautions that explanation is frequently conflated with prediction, which erodes this consensus. Within AI, the centrality of explanations in knowledge creation is undeniably contentious. A common criticism of machine learning is the lack of interpretability; learners as black box oracles. This problem is an active area of research, but for many, inexplicability is a feature, not a bug, pointing the way to new explanationless methods of scientific discovery. Comparing this process of trial and error to alchemy, the computer scientist Ali Rahimi expressed anguish over “an entire field that’s become a black box.” Is this a leap to a brave new science or the regressive impact of a flawed theory of knowledge? I explored this topic at length in a previous post.

However seductive, the idea of explanationless theories fails in a fairly profound way. Not only is science neatly encapsulated as the pursuit of explanations, it is made possible only through the foundations of explanations. Whether in science or everyday life, people depend on long links of explanations they cannot directly observe or elucidate in any depth. Knowledge creators, whether humans or machines, cannot avoid explanations, even if they wistfully deny them.

But there is a more valid criticism of explanations. If there are any functional gaps in your solution, they bar the way to fully autonomous systems. So if induction is deemed essential to any widget or gear in your machine, the problem of induction may be an acceptable compromise. The need for justification, discussed above, is one perceived gap. A major proponent of Popper, David Miller, highlights some hard questions facing Popper’s theory. “It must nonetheless be conceded that Popper’s deductivism, in contrast to some forms of inductivism, and especially in contrast to Bayesianism, has no extensively developed account of what is usually called decision making under uncertainty and risk.” Another major gap in Popper’s philosophy is in the realm of creativity and discovery, where leaps in imagination lead to startlingly new conjectures and hypotheses. This is an area that Popper viewed as psychological and inherently subjective. Popper concluded (contrary to the title of one of his best known books) there is no logic of scientific discovery.

We’ll return to these questions of prediction, decision-making, discovery and creativity in future posts, as major explorations across the AI landscape. But before the question of Why AI works can be answered, there is one additional aspect of explanations that needs to be considered: If explanations are key to knowledge creation, how do you know your explanations are any good?

Criteria for Good Explanations

Philosophers have categorized many different forms of explanation, such as the degree to which an explanation covers the observations, explanations by analogy, and explanations of purpose rather than cause. In The Beginning of Infinity: Explanations that Transform the World, Deutsch stresses the criterion of variability, “for, whenever it is easy to vary an explanation without changing its predictions, one could just as easily vary it to make different predictions if they were needed.” Deutsch contrasts myths that explain the seasons with the scientific explanation based on the Earth’s axis of rotation and orbit around the sun. The myths are easy to vary: Nordic and Greek examples incorporate widely divergent details of gods, kidnapping, retributions and wars. The myths are essentially interchangeable. The scientific explanation, on the other hand, is hard to vary since each detail of the explanation is intricately connected to the phenomena it explains. Change a detail and the scientific explanation falls apart. An explanation becomes hard to vary when all the details in the theory play a functional role.

In her book, How the Laws of Physics Lie, Nancy Cartwright credits Bas van Fraassen with formulating a particularly pointed criticism of explanations. “Van Fraassen asks, what has explanatory power to do with truth? He offers more a challenge than an argument: show exactly what about the explanatory relationship tends to guarantee that if x explains y and y is true, then x should be true as well. This challenge has an answer in the case of causal explanation, but only in the case of causal explanation.” A causal explanation details the specific chain of cause and effect that leads to an outcome. Causal explanations satisfy van Fraassen’s challenge, as well as Deutsch’s hard-to-vary criterion. All the details in a causal explanation play a functional role.

Good explanations are further constrained by their need to play nice with other good explanations. They must both cover the phenomena they purport to explain, as well as demonstrate an external coherence. You might think you have a groundbreaking new theory of quantum gravity, but it had better play nice with quantum mechanics and general relativity. Good luck with that!

In stark contrast to the glut of data and observations, hard-to-vary explanations are hard to find. This observation has important consequences in AI. First, it illuminates the dreadful conflation between explanations and observations. Observations are plentiful. Bad explanations are plentiful. But good, hard-to-vary explanations are exceedingly rare. Second, there exist criteria for good explanations, which in turn provide the means by which they may be sought and evaluated.

But of course, this is all just talkity talk when you need to create a practical, working solution! Judea Pearl, a pioneer in probabilistic approaches to AI and the development of Bayesian networks, is also a pragmatic AI theorist. In his book on causality, he reminds us of the basic “intuitions” behind AI. “An autonomous intelligent system attempting to build a workable model of its environment cannot rely exclusively on preprogrammed causal knowledge; rather, it must be able to translate direct observations to cause-and-effect relationships.”

You’ll recognize in Pearl’s advice the prevailing methodology of AI, introduced above: We need to build an autonomous intelligent system; pragmatically, it needs to be workable, end-to-end; and that solution must involve induction, an ability to translate direct observations to cause-and-effect relationships, “assuming that the bulk of human knowledge derives from passive observations.” Induction is the principle assumed, intuitive and self-evident, just common sense.

Defying Common Sense: Why Does AI Work?

Knowledge creation defies common sense. Induction isn’t a principle, it’s a Faustian bargain. But like any deal with the devil, it’s a choice made against a paucity of other choices. What does AI look like when it’s based on an anti-inductive theory of knowledge? We can generalize, extrapolate or analogize. We can inch step-by-step towards the best explanation. We can randomly mutate our ideas or throw shit against the wall. But feats of creativity, of bold conjecture, are still largely mysterious.

Wherever this mystery leads, look for explanations at the helm. Explanations figure prominently in the past, present and future of AI. The popular history of AI is that non-inductive approaches necessarily lead to brittle hand crafted systems of knowledge engineering. But on closer examination, explanations have been successfully operationalized in many guises, such as the inductive bias of a learner, the role of background knowledge in “priming the pump” of induction, the importance of sound priors for confronting the “curse of dimensionality”, and the venerable Occam’s razor.

The most imaginative solutions are increasingly focused on explanations. Explanatory factors enable rapid learning using only a handful of observations. Innovations in compositionality, distributed representations, and transfer learning mirror the integration of explanations across multiple domains. (Recall a key criterion of good explanations is their external coherence.) Explanations also figure prominently in the context of automated scientific discovery through heuristics and search strategies to narrow down a large space of possible hypotheses and new ideas. Generative approaches illustrate the power of explanatory models to create data based on explanations of their environments. These visionary approaches leap over the brittle corpses of knowledge engineering in surprising ways.

Explanations impose constraints on knowledge creation and constraints lead to creativity. In their discussion of the role of knowledge in learning, Russell and Norvig explain, “The modern approach is to design agents that already know something and are trying to learn some more. This may not sound like a terrifically deep insight, but it makes quite a difference to the way we design agents. It might also have some relevance to our theories about how science itself works.” Indeed, it’s why science works!

So why does AI work? Inductive systems work because the problem of induction can be managed. In many respects, research and engineering efforts are directed to minimizing the errors that travel with induction. With the incorporation of sound background knowledge and assumptions of uniformity (such as, the future will resemble the past), inductive technologies succeed. And since there is no universal solution, no free lunch, a deep understanding of the knowledge domain is paramount. This competitive advantage is often expressed as privileged access to data, but it’s better understood as the power of explanations to intelligently guide you to the right data.

A new perspective is gained when induction is cast as a problem to navigate rather than a principle unchallenged. While AI may ignore Popper, Popper reads deeply on AI. And just as various schools of philosophy converged on the consensus of explanations, various schools of AI are converging on the foundation of explanations to create good knowledge.

Special thanks to Kathryn Hume and David Deutsch for their feedback on earlier drafts of this post.

Aaronson, S. (2011). Why philosophers should care about computational complexity. https://arxiv.org/abs/1108.1791

Cartwright, N. (1983). How the laws of physics lie. Clarendon Press.

Cohnitz, D., & Rossberg, M. (2016). Nelson Goodman. https://plato.stanford.edu/archives/win2016/entries/goodman/

Deutsch, D. (1998). The fabric of reality. Penguin.

Deutsch, D. (2011). The beginning of infinity. Allen Lane.

Fetzer, J. (2017). Carl Hempel. https://plato.stanford.edu/archives/fall2017/entries/hempel/

Miller, D. (2014). Some hard questions for critical rationalism. http://www.scielo.org.co/scielo.php?pid=S0124-61272014000100002&script=sci_arttext&tlng=en

Pearl, J. (2009). Causality: models, reasoning, and inference (2nd ed.). Cambridge University Press.

Popper, K. R. (1968/2002). The logic of scientific discovery. Routledge.

Russell, S., & Norvig, P. (2009). Artificial intelligence: a modern approach (3rd ed.). Pearson.

Salmon, W. C. (1989). Four decades of scientific explanation. University of Pittsburgh Press.

Sculley, D., Snoek, J., Wiltschko, A., & Rahimi, A. (2018). Winner’s curse? On pace, progress, and empirical rigor. https://openreview.net/forum?id=rJWF0Fywf

Shalev-Shwartz, S., & Ben-David, S. (2014). Understanding machine learning: from theory to algorithms. New York: Cambridge University Press.

Sweeney, P. (2017). Why prediction is the essence of intelligence. https://medium.com/inventing-intelligent-machines/prediction-is-the-essence-of-intelligence-42c786c3e5a9

Turing, A. M. (1950). Computing machinery and intelligence. Mind, 59(236), 433–460.

Vickers, J. (2014). The problem of induction. https://plato.stanford.edu/archives/spr2018/entries/induction-problem/

Wolpert, D., & Macready, W. (1997). No free lunch theorems for optimization. IEEE transactions on evolutionary computation, 1(1), 67–82. doi:10.1109/4235.585893